Does Camera Bit Depth Matter?

A question to this effect was recently posted to ImageMuse – “A discussion group of cultural heritage imaging”. If you’re involved in heritage digitization and aren’t yet an ImageMuse member, we would encourage you to join. It’s a supportive and informative place for professional development and networking.

This question touches on several closely related topics, and it’s easy to oversimplify the relationship between them.

- Bit Depth from the Sensor

- Image Noise

- Dynamic Range

- An institution’s needs

- Bit Depth of Derivatives (e.g. TIFFs)

- Digitization Standards

“In some cases bit depth matters a great deal. In this close-up of a Finlay Photographic Plate, the darkest areas of the image are so dark that they measure 1-2 RGB values (<1 L*) on a standard 0-255 scale. They are visually black. But a high-quality 16-bit camera can capture accurate and useful data in these dense areas of the frame, allowing researchers to see content that would be obscured even in careful in-person viewing. Note: the mosaic grid pattern in the image is part of the object; Finlay Photographic Plates used a color mosaic not unlike modern digital sensors. See here for more information.”

What’s Behind this Question?

The underlying goal is to produce an image that faithfully represents the original object and can serve as a surrogate for in-person viewing. Noisy shadows, clipped highlight detail, and gradients with artificial banding are all examples of image quality issues that make the image less faithful to the object.

Guidelines such as FADGI and ISO include metrics for image noise and dynamic range. These metrics are included when you measure a target such as the DT NGT2, IE UTT, or FADGI 19264 target in software like Golden Thread. So it’s tempting to think we can skip the technical details and simply shoot an image and check its compliance with a given FADGI or ISO level. Unfortunately, while that’s a good starting point, it’s not quite that simple.

Targets are Not Enough

The tests in FADGI and ISO for image noise and tonal smoothness are not especially thorough and can be easily fooled. For example, otherwise unacceptable noise can sneak through testing by means of software noise reduction (which leads to ugly artifacts and loss of fine detail in real subject matter). Banding is also not reliably detected by test targets – systems that show excessive banding in the smooth blue sky of a glossy photographic print may not show banding on the grayscale ramp of the 19264 target. Transmissive targets are especially weak here, as they often have less contrast (a lower DMax) than high-contrast transmissive film or glass plates.

Moreover, tonal smoothness (i.e., freedom from banding) is not yet measured by most image quality analysis software, and the targets and software used to evaluate transmissive materials aren’t as mature as those used in reflective imaging. These tests often miss flare and noise that would show up in real-world subject matter. So in addition to measuring a target against digitization standards, it’s sensible to review real-world images from a given system before accepting the results, especially if it’s a system you haven’t previously worked with.

Does a Higher Bit-Depth Camera Guarantee Better Quality?

Measuring noise and dynamic range directly (via targets or real-world subject matter) is important because camera bit depth, in and of itself, does not guarantee quality in these areas. Most modern cameras read their sensors at 10, 12, 14, or 16 bits. Usually a 16-bit camera system will have higher dynamic range, lower noise, and smoother gradients than a camera with lower bit depth, but that is not guaranteed. How the camera is used matters as well – an underexposed raw from a 16-bit camera might well contain more noise than a properly exposed raw from a 14-bit camera. Moreover, two systems with the same sensor bit depth may have significantly different noise or dynamic range. For example, older 16-bit cameras from the 2010s such as the Phase One IQ180 produced far noisier images than a modern 16-bit camera like the Phase One iXH. So camera bit depth should not be used as a lazy proxy for quality, though it might be useful in some contexts as a minimum qualification.

Why Aren’t All Cameras 16-bit?

The bit depth of a camera is an engineering trade-off. For general photography (e.g. consumer photos of cats and babies), a 16-bit sensor is not just overkill; it also eats up battery power, takes longer to record each image, and adds expense that would be better spent on other components. Using a 16-bit sensor in a consumer camera would be bad engineering.

It’s not laziness or poor engineering to make a camera with a 12-bit sensor; it’s the appropriate engineering choice if the use case doesn’t benefit from a higher bit depth. In cameras where weight and size are important, where a high frame rate is desirable, or where a modest increase in retail price would make the product uncompetitive, a lower bit depth sensor is the right choice. But heritage digitization is a use case that often calls for higher bit depth.

Flare

Notably, if flare is not properly controlled, deep shadow detail will quickly be masked by it. Both sides of the lens must be clean (no fingerprints or dust). A good lens shade is important. The lens itself must be of high quality – cheaper consumer lenses and industrial lenses often use cheap lens coatings or skimp on the internal baffling that keeps flare from forming inside the lens. Finally, the environment, the operator’s clothing, and the shooting surface should be dark. When shooting transmissive material, the light source should be tightly gated (masked around the subject) so that light does not spill around the object.

Noise

Digital noise, mostly the result of heat affecting the nominal performance of the pixels, can also obscure shadow detail, thus decreasing the effective dynamic range. For example, early medium format cameras were natively 16-bit but used CCD sensors that produced so much heat that several of those bits were just noise. Modern high-end systems like the Phase One iXH 150mp use BSI (back-side illuminated) CMOS sensors that produce a much cleaner signal.

Newer cameras such as the Phase One IQ4 150mp on a DT RCam offer additional options to decrease digital noise. For example, you can use Dual Exposure, which samples a brighter and a darker exposure into a single raw file, or Automatic Frame Averaging. In either case, from the user’s perspective you press the trigger once and get one raw file, but under the hood the data is sampled in a way that makes the deep shadows even cleaner than normal, increasing the effective dynamic range of the sensor.
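The general reason frame averaging cleans up shadows can be illustrated with a small simulation. This is a minimal sketch, not Phase One’s implementation: it assumes each frame’s noise is independent, zero-mean, and Gaussian (the `sigma` value is an arbitrary illustrative number), whereas real sensors also have fixed-pattern noise that averaging does not remove. Under that assumption, averaging N frames reduces random noise by roughly a factor of √N.

```python
import random
import statistics


def averaged_noise(frames, trials=20000, sigma=10.0, seed=1):
    """Standard deviation of the per-pixel error after averaging `frames` exposures.

    Assumes independent, zero-mean Gaussian noise of `sigma` (arbitrary units)
    in every frame; fixed-pattern noise is deliberately not modeled.
    """
    rng = random.Random(seed)
    errors = []
    for _ in range(trials):
        # Noise seen by one pixel in each of the frames that get averaged.
        samples = [rng.gauss(0.0, sigma) for _ in range(frames)]
        errors.append(sum(samples) / frames)
    return statistics.pstdev(errors)


for n in (1, 4, 16):
    print(f"{n:2d} frame(s): noise ~ {averaged_noise(n):.2f}")
```

In this idealized model, quadrupling the number of averaged frames roughly halves the random noise, which is why averaged deep shadows look cleaner.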

The Material and Institution

The type of collection and the needs of the institution are also important to consider. If you’re digitizing modern circulation material from general collections at the request of a sight-impaired patron, then a low bit-depth system is almost surely fine; OCR for modern printed materials can work surprisingly well on 1-bit data, let alone 8-bit or 12-bit. At the other extreme, contrasty transmissive materials such as a dense glass plate may challenge even the best 16-bit systems. In general, materials that are contrasty or that the user might adjust after capture (e.g. photographic color negatives or documents with faded text) require better noise, dynamic range, and tonal smoothness characteristics.

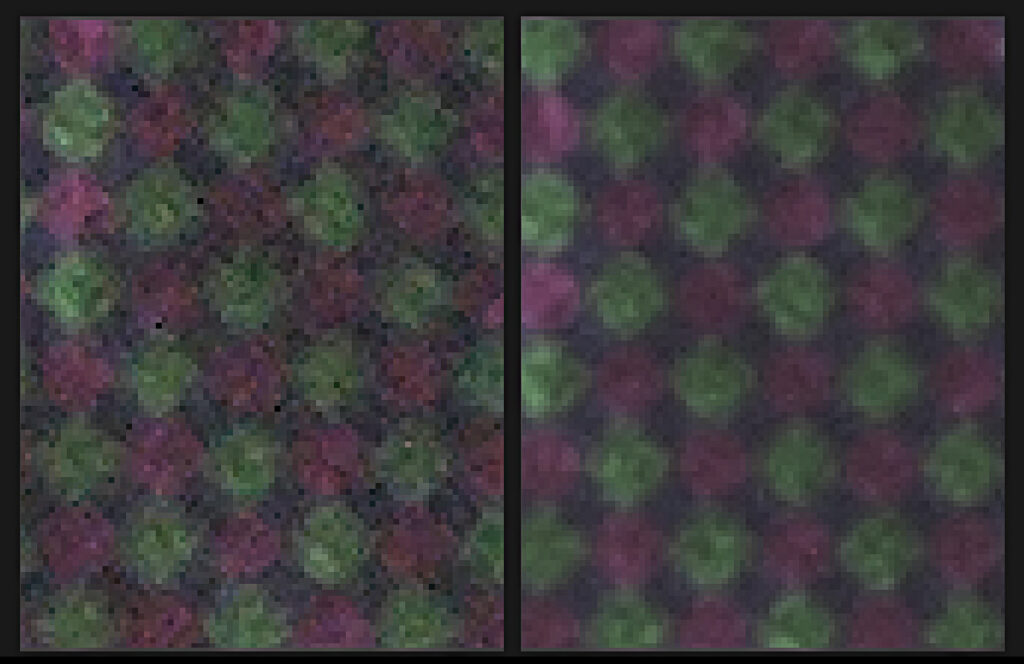

“Comparison of noisy 14-bit data to clean 16-bit data for a detail of a Finlay Photographic Color Plate. This area of the frame was extremely dense and is shown with a four-stop increase in exposure. The left is a 14-bit capture, while the right is a 16-bit capture with Frame Averaging used to push noise even lower. Dense glass plates are an example of heritage material that warrants 16 bits of clean data.”

Output Derivatives

All of the above is about the camera (or scanner) itself. Output derivatives such as TIFFs can be either 8-bit or 16-bit. For example, a raw file from a 12-bit camera might be processed into a 16-bit TIFF; nothing is gained by going to that higher bit depth (the image only has 12 bits of quality), but it does avoid the loss of quality that would come from making an 8-bit TIFF. FADGI provides specific bit-depth recommendations for derivatives based on material and star level. The only downside of 16-bit encoding for a derivative is the larger file size. For this reason, it is common for the Preservation Digital Object to be 16-bit while Access Derivatives are saved as 8-bit.
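A rough sketch of why a 16-bit TIFF preserves 12-bit data while an 8-bit TIFF does not. It assumes a simple linear rescale from sensor codes to TIFF codes (the function name is hypothetical); a real raw converter also applies gamma, color transforms, and other processing, so treat this only as an illustration of the encoding limit.

```python
def distinct_levels_after_encoding(sensor_bits, tiff_bits):
    """Count how many distinct TIFF values survive when every sensor value is rescaled."""
    sensor_max = 2**sensor_bits - 1
    tiff_max = 2**tiff_bits - 1
    # Linearly rescale each possible sensor code into the TIFF's range and round.
    encoded = {round(code * tiff_max / sensor_max) for code in range(sensor_max + 1)}
    return len(encoded)


print(distinct_levels_after_encoding(12, 16))  # 4096
print(distinct_levels_after_encoding(12, 8))   # 256
```

The 16-bit file holds no more real information than the 4,096 sensor levels, but it loses none of them, at the cost of two bytes per sample instead of one.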

“24-bit” and “48-bit” scanners

One final note: in decades past (e.g. the 1990s and earlier), when monochrome scanners were more common, it was standard practice to describe the bit depth of a color scanner in three-channel terms to distinguish it from a monochrome scanner. For example, a color scanner with an 8-bit sensor would be called a “24-bit scanner” (8 bits for red + 8 bits for green + 8 bits for blue = 24 bits). Moreover, some imaging systems are labeled according to the bit depth of their TIFFs rather than their sensor bit depth. That leads to rather confusing situations in which an older, low-quality scanner with a 10-bit sensor was labeled “48-bit” (because it could output a 16-bit RGB TIFF from the 10-bit sensor data). This practice is less common today, though the FADGI document (page 107 of FADGI 2023) still uses bit-depth language framed in this manner.
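The old labeling convention is simple arithmetic, sketched below with hypothetical helper names. The point is that a “total bits” label describes the per-channel file container, not the sensor; the example assumes the 10-bit scanner from the text writing a “48-bit” RGB TIFF.

```python
def bits_per_channel_from_label(label_bits, channels=3):
    """Convert a 'total bits' label (e.g. 24-bit, 48-bit) to bits per channel."""
    return label_bits // channels


def real_levels_per_channel(sensor_bits):
    """Tones the sensor can actually distinguish, whatever the file container."""
    return 2**sensor_bits


container_bits = bits_per_channel_from_label(48)  # 16 bits per channel
print(container_bits, 2**container_bits)          # 16 65536: what the label suggests
print(real_levels_per_channel(10))                # 1024: what a 10-bit sensor delivers
```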

What is a Bit?

The foundational building block of a digital file is the binary unit of data, limited to 1 or 0, called a “bit”. In a digital image with only pure-white and pure-black pixels, each pixel can be represented by a single bit of data. For example, here is a plus symbol, which could be written as 010111010.
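To make the plus symbol concrete, the nine bits can be read as a 3×3 grid. This sketch assumes the bits are listed row by row, left to right, with 1 meaning a black pixel and 0 a white one (the text does not state the convention); `#` stands in for black and `.` for white, and `decode_1bit` is a hypothetical helper.

```python
def decode_1bit(bits, width):
    """Split a 1-bit-per-pixel string into rows, drawing 1 as '#' and 0 as '.'."""
    rows = [bits[i:i + width] for i in range(0, len(bits), width)]
    return ["".join("#" if b == "1" else "." for b in row) for row in rows]


for line in decode_1bit("010111010", width=3):
    print(line)
# .#.
# ###
# .#.
```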

You can do a surprising amount with just pure black and just pure white. For example, here is an image of a cat that is 100×100 pixels with only 1 bit per pixel. It’s not a highly detailed, photo-realistic, high-fidelity image. On the other hand (or paw), it’s very clearly a cat.

Typically more than 1 bit is used per pixel. When bits are grouped together they can represent finer grayscale tones than just pure-black and pure-white. For example, here is the same cat image but with two bits per pixel. With two bits you can have 00, 01, 10, or 11 which correspond to black, dark-gray, light-gray, and white. That is, 2-bits allows 4 tones.

Going to three bits expands the options to 000, 001, 010, 011, 100, 101, 110, 111. That means each pixel can be any of 8 tones. Here is the same cat with three bits per channel. The ear that was comic-book-like (“posterized”) in the 2-bit image above is more organic and natural (good “tonal fidelity” or “tonal smoothness”) at 3-bit.
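Posterization can be reproduced by reducing an 8-bit grayscale ramp to fewer bits. The `quantize` helper below is a hypothetical illustration that assumes uniform bins and spreads the resulting tones evenly from black (0) to white (255); real image software may round or dither differently.

```python
def quantize(value, bits):
    """Map an 8-bit gray value (0-255) onto 2**bits evenly spaced tones."""
    levels = 2**bits
    index = value * levels // 256             # which of the `levels` bins the value falls in
    return round(index * 255 / (levels - 1))  # re-expand that bin to the 0-255 scale


ramp = range(256)  # a smooth black-to-white gradient
print(sorted({quantize(v, 2) for v in ramp}))  # [0, 85, 170, 255]
print(len({quantize(v, 3) for v in ramp}))     # 8
```

With 2 bits, the whole ramp collapses into four flat bands (black, dark gray, light gray, white), which is the comic-book look of the ear; 3 bits doubles that to eight bands.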

So the palette, or depth, of tones available to render the image depends directly on the number of bits used to record it. That’s “bit depth” – the number of bits, which in turn determines how many unique tones are available. The math is simple: increasing bit depth by 1 doubles the number of tones, because every existing combination of bits can be followed by either a 0 or a 1.

| Bit Depth | Tones Available |
|---|---|
| X bit | 2^X levels |
| 1 bit | 2 levels |
| 2 bit | 4 levels |
| 3 bit | 8 levels |
| 4 bit | 16 levels |
| 8 bit | 256 levels |
| 12 bit | 4,096 levels |
| 14 bit | 16,384 levels |
| 16 bit | 65,536 levels |
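The doubling rule behind the table can be checked by generating the bit patterns directly. In this sketch (the helper name is hypothetical), each extra bit is built exactly as described above: every existing pattern is followed by a 0 or a 1.

```python
def bit_patterns(bits):
    """List every pattern of `bits` binary digits."""
    patterns = [""]
    for _ in range(bits):
        # Append a 0 or a 1 to every existing pattern, doubling the count.
        patterns = [p + b for p in patterns for b in "01"]
    return patterns


print(bit_patterns(2))  # ['00', '01', '10', '11']
for depth in (1, 2, 3, 4, 8, 12, 14, 16):
    print(f"{depth} bit: {len(bit_patterns(depth)):,} levels")
```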

Note that we used “3 bits” above because it helps illustrate the rapid impact of additional bit depth, but for engineering reasons it’s very rare to see an odd number of bits (other than the 1-bit format for pure black-and-white images).

So far we’ve only looked at monochrome (grayscale) images. For color images we need to store bits in channels specific to red, green, and blue. In a 1-bit 3-channel (RGB) image, a given pixel could have red set to 1 or 0, green set to 1 or 0, and blue set to 1 or 0, leading to 8 total combinations (2•2•2).

That leads to a cat image which has a pop-art appearance. It provides a crude understanding that the cat is some shade of red or brown (and not, say, blue or green) and is on some kind of multi-colored background, but not much else.

If we continue to use three channels per pixel but increase the number of bits per channel, we quickly get a very large number of possible colors. With 2 bits per channel there can be 4 levels of red, 4 levels of green, and 4 levels of blue, which means 64 unique colors (4•4•4).
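The color counts in this section all follow from raising the per-channel tone count to the number of channels. A minimal sketch, with a hypothetical function name:

```python
def total_colors(bits_per_channel, channels=3):
    """Number of distinct colors when each of `channels` channels has 2**bits tones."""
    return (2**bits_per_channel) ** channels


for bits in (1, 2, 3, 8):
    print(f"{bits} bit(s) per channel: {total_colors(bits):,} colors")
# 1 bit(s) per channel: 8 colors
# 2 bit(s) per channel: 64 colors
# 3 bit(s) per channel: 512 colors
# 8 bit(s) per channel: 16,777,216 colors
```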

Here’s our cat in 2-bit 3-channel color (64 colors). We’ll also zoom out to see the rest of this glorious cat art.

On the one hand, there are plenty of colors to get the gist of the image. On the other hand, the color seems “painted on” in a crude way – there are no subtle transitions between colors, and there is “noise” in the shadows in the top left.

Increasing bit depth to 3-bits per channel (512 colors) does less than you might expect.

There is still plenty of very strong banding.

Producing a “reasonable” image of this cat requires significantly more than 512 unique colors. Here is a rendering at 8 bits per channel (256•256•256 = approx. 16.7 million colors).

Even with 16.7 million colors there are still “problem areas.” For example, here’s a crop of the shadow area in the top left, with some contrast added to accentuate the issue. You can see banding patterns where subtle amounts of color end abruptly, without any organic smoothness.

But these issues are subtle unless you exaggerate them with post-processing adjustments. That is, 8-bit (3-channel) data does a pretty good job of displaying most images as-is.

Why do we need high bit-depth in heritage digitization?

If 8-bit is enough to do a pretty good job displaying most images, why then is there so much fuss about using high bit-depth cameras to digitize heritage materials? There are several reasons for this:

- Non-linearity

- Enhancement

- Contrasty Material (e.g. Slide Film)

- The unknown future

Non-Linearity

Cameras are basically photon counters – if twice the light hits them, they record a signal twice as strong. But the human eye doesn’t respond quite the same way, so in almost all cases a gamma curve is used to translate what a camera sees into what a person sees. This has the effect of “stretching out” the data in the shadows. For example, for an 8-bit camera, applying Adobe RGB (1998) (gamma 2.2) to raw camera values of 5 and 15 (deep shadows) results in values of 43 and 70, respectively. That means visual content that the camera recorded with only 10 steps of precision will be stretched across 27 levels once gamma is applied. That’s of no consequence if the camera’s bit depth is 16 bits, where the number of in-between steps is in the thousands. But this consequence of gamma translation becomes problematic for shadow fidelity as the bit depth of the camera decreases. This is not a large effect, but it means we need a couple more bits of depth than we otherwise would.
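The gamma example can be reproduced in a few lines. This sketch assumes a pure power-law encoding with gamma 2.2 applied to normalized 8-bit values, as the text describes; it ignores everything else a raw converter does, and `apply_gamma` is a hypothetical helper.

```python
def apply_gamma(raw, gamma=2.2, max_value=255):
    """Encode a linear raw value with a simple power-law gamma curve."""
    return round(max_value * (raw / max_value) ** (1 / gamma))


shadow_raw = range(5, 16)  # raw values 5 through 15 in deep shadow
encoded = [apply_gamma(v) for v in shadow_raw]
print(encoded[0], encoded[-1])   # 43 70
print(encoded[-1] - encoded[0])  # 27
```

Eleven raw values end up spread over a 27-level span, leaving unused output codes between them. For comparison, the same tonal range in 16-bit raw data runs from 1285 to 3855, about 2,570 raw steps.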

Enhancement

There are use cases, even in heritage imaging, that require “enhancing” the image (at least in a derivative) – for example, increasing the contrast of faint or faded text to improve its legibility. Whenever you apply post-processing to a file, such as brightening it, adding contrast, or increasing saturation, you are stretching the data in that file. Image data with higher bit depth can be stretched further before it shows issues such as banding.
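The effect of stretching can be sketched by applying a strong contrast boost to the darkest eighth of the tonal range and counting how many distinct 8-bit display values result. This is an idealized, noise-free model using a hypothetical helper; in real images noise partly masks the gaps, but the loss of distinct tones shown here is the mechanism behind banding.

```python
def display_levels_after_stretch(source_bits, gain=8, out_bits=8):
    """Distinct display tones left after brightening the darkest 1/gain of the range by `gain`x."""
    src_max = 2**source_bits - 1
    out_max = 2**out_bits - 1
    shadow_top = src_max // gain  # last source code inside the shadow region
    outputs = set()
    for code in range(shadow_top + 1):
        stretched = min(code / src_max * gain, 1.0)  # linear contrast boost, clipped at white
        outputs.add(round(stretched * out_max))
    return len(outputs)


print(display_levels_after_stretch(8))   # 32 tones, with gaps of 8 between them
print(display_levels_after_stretch(16))  # 256 tones, a smooth gradient
```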

This is especially pronounced in photographic material where the film itself was just a starting point for the final image. A photographic color negative has most of its tones in a very narrow range of orange-ish colors (thanks to its strongly orange-colored base). To invert this and translate it into “normal” color, the data must be stretched significantly. Doing this with lower bit depth images will cause artifacts such as banding and lower color accuracy.

Contrasty Material (e.g. Slide Film)

As an extension of “enhancement,” sometimes the material itself is so contrasty that it is not fully legible without post-processing. For example, many slide film emulsions, when scanned linearly and presented on a monitor, will totally obscure subject matter deep in the shadows that would be visible to the unaided eye in person (because our eyes are especially great at seeing in contrasty scenes – lest we be eaten by a black bear lurking in a shadow).
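A rough way to see why dense material is demanding: an area of optical density D lets only 10^-D of the light through, so it lands on a tiny fraction of the camera’s linear scale. This sketch assumes exposure is set so the clearest part of the material just reaches the top code value, and it ignores noise and flare, which in practice eat further into the shadows. The helper names are hypothetical.

```python
import math


def linear_code(density, bits):
    """Raw code value a patch of the given optical density reaches in a linear capture.

    Assumes the clearest area of the material is exposed to the maximum code value.
    """
    transmittance = 10 ** (-density)  # fraction of light passing through
    return transmittance * (2**bits - 1)


def density_to_stops(density):
    """Each unit of optical density is log2(10), about 3.32 stops."""
    return density * math.log2(10)


for bits in (8, 14, 16):
    print(f"{bits}-bit: density 3.0 lands at code {linear_code(3.0, bits):.1f}")
print(f"density 3.0 is {density_to_stops(3.0):.1f} stops below the clearest area")
```

At 8 bits such a patch rounds to zero, while a 16-bit capture still has dozens of code values to describe it, before noise is taken into account.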

The Unknown Future

Heritage digitization is equally focused on the past and the future. Informed by their proximity to collections that date from hundreds or thousands of years in the past, digitization planners are vividly aware of the inherent unknowns of the future. Even for collections and material without an obvious need for higher bit depth it’s a good idea to aim for the highest image quality that can be reasonably accomplished given available resources.

Who knows what currently unknown future use cases will benefit from higher image quality? For example, current-generation AI technologies such as face recognition or OCR usually do not require, and in many cases do not meaningfully benefit from, higher bit depth, but we are only a few years into the unending development of AI. Just 100 years ago digitization at any bit depth was impossible, so it’s hard to anticipate the value of a given bit depth 100 years hence. Bit depth cannot be added after the fact, so opting for the best available bit depth today is responsible stewardship of a collection.

One example of an unknown use case involves the same Finlay Photographic Plates that were used as examples earlier in this article. Having useful detail deep into the shadows allows us to find the physical pixels (each colored area of the glass plate is a pixel, albeit an analogue rather than digital one). That’s an important component of a capture, stitching, and restoration solution for early color plates that we’re working on alongside National Geographic Society and Jan Hubićka of Charles University. More details on that will be released later this year.

Good data finds good uses. When imaging for the future we should aim to make the best data possible.

Summary

Life is rarely 1-bit (black and white) and the most truthful answer to most questions of “does X matter?” is “it depends”.

- Bit depth matters more for high-contrast material such as slide film than for low-contrast material such as paper.

- Generally (but not always) higher bit-depth cameras are higher quality cameras.

- The target-based tests for noise and dynamic range are not especially thorough and can be easily fooled. This is especially true for transmissive imaging.

- Cats are glorious.

Doug Peterson | Owner of Digital Transitions, Director of Research and Development

Doug Peterson is Co-Owner and Head of R+D and Product Management at Digital Transitions. He holds a BS in Commercial Photography from Ohio University. He is the lead author of a series of technical guidelines and recommendations for cultural heritage digitization, including the Phase One Color Reproduction Guide, Imaging for the Future: Digitization Program Planning, and the DT Digitization Guides for Reflective and Transmissive Workflows. He oversees the DT Digitization Certification training series and has presented multiple Short Courses at the IS&T Archiving imaging conference. He is a member of the International Organization for Standardization (ISO), where he sits on Technical Committee 42, which works on digitization standards.